One PR review burned half a million tokens in an infinite loop. And even after we fixed that, our failover infrastructure was silently destroying our cache hit rates.

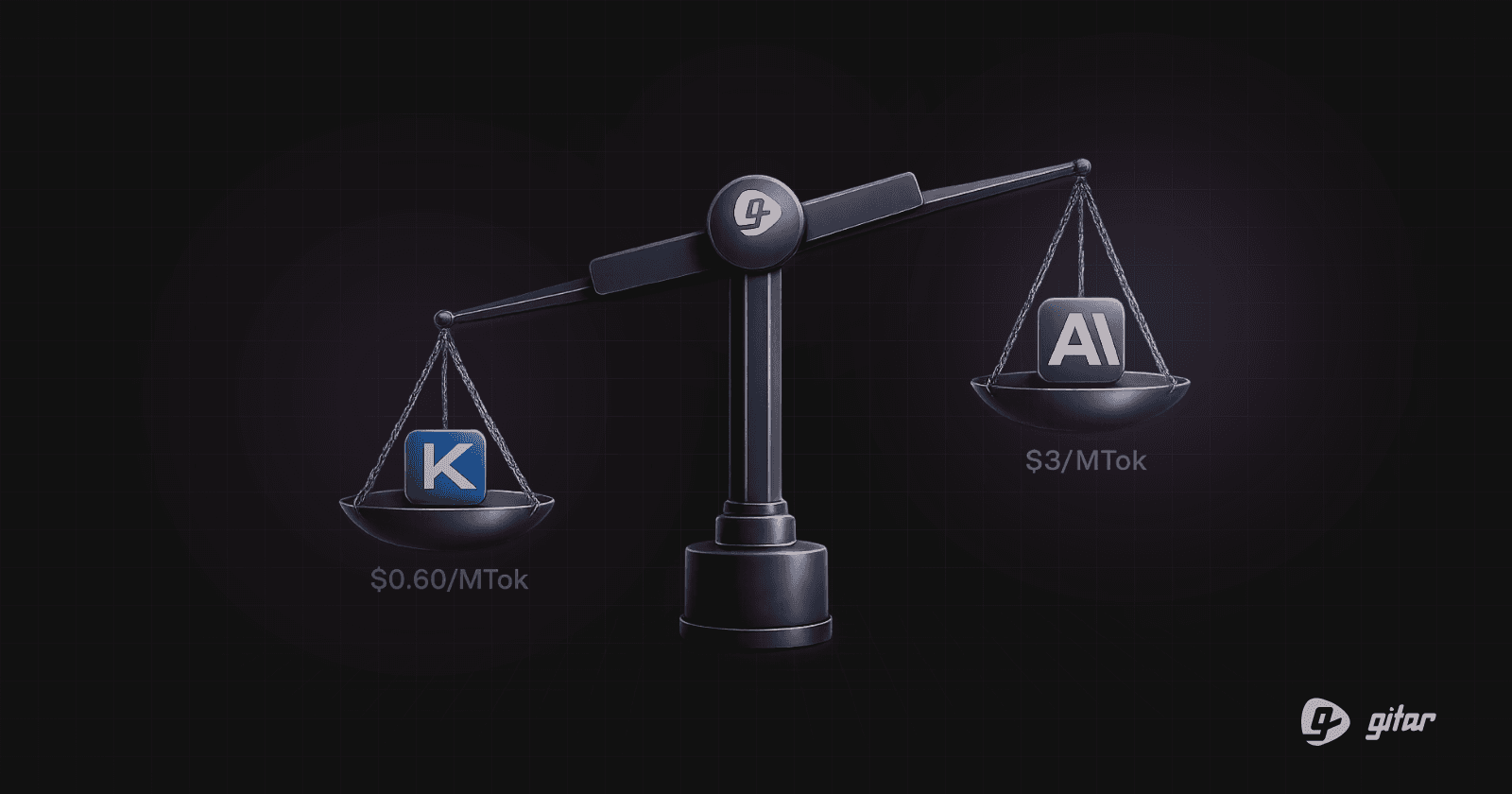

Kimi K2.5 at $0.60/MTok input vs Claude Sonnet at $3/MTok. We wanted to see what it could do on a real workload over a weekend. Gitar runs multiple specialized AI agents on every code change. They review code, fix CI failures, execute custom repository rules as workflows, and respond to developer feedback in-thread. That's easily 50-100 LLM calls per PR, and complex ones can hit 500+. We built our agent harness from the ground up in Rust, which gives us full control over the orchestration loop, provider routing, and failover behavior. We set up Kimi as our primary orchestration model on staging with Claude as failover, and pointed real traffic at it.

Tokens per outcome, not tokens per dollar

Per-token pricing matters less than how many tokens it takes to finish the job.

But our agent got stuck in a loop. It would request a tool call, never receive the result (more on why below), and retry. One session burned roughly half a million tokens before we killed it. Because every iteration resent an ever-growing context, that one session cost more than Claude would have charged for a clean single-pass completion. And the review never finished.
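Why does a loop get expensive so fast? Each iteration resends the whole, growing context as input, so billed input tokens grow quadratically with the number of iterations. A back-of-the-envelope sketch, assuming a starting context of `c0` tokens that grows by a fixed `delta` per iteration (hypothetical parameters, not values from our logs):

```rust
/// Total input tokens billed across `iterations` calls when each call resends
/// the full context: c0, c0 + delta, c0 + 2*delta, ...
/// `c0` and `delta` are illustrative assumptions, not measured values.
fn cumulative_input_tokens(c0: u64, delta: u64, iterations: u64) -> u64 {
    // Sum of an arithmetic series: n*c0 + delta * n*(n-1)/2
    iterations * c0 + delta * iterations * iterations.saturating_sub(1) / 2
}

fn main() {
    // Hypothetical: 20k-token starting context, +2k tokens per retry.
    for n in [1, 5, 10, 20] {
        println!(
            "{n:>2} iterations -> {} input tokens",
            cumulative_input_tokens(20_000, 2_000, n)
        );
    }
}
```

With these made-up numbers the context never exceeds 58k tokens, yet 20 iterations bill 780k input tokens.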

Kimi also burned more tokens per task. We ran both models with reasoning tokens minimized: thinking off for Claude, low budget for Kimi. Without that reasoning overhead, Kimi's self-correction was noticeably worse. It would retry the same failing call identically rather than trying an alternative path:

```text
[attempt 1] read_file("src/main.rs") → not found
[attempt 2] read_file("src/main.rs") → not found
[attempt 3] read_file("src/main.rs") → not found
```

A model that reliably completes in one pass at a higher per-token rate will almost always be cheaper than one that occasionally spirals. The metric that matters is cost per successful outcome.
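Concretely, cost per successful outcome divides everything you spent, including runs that failed or were killed, by the number of runs that actually finished the job. A minimal sketch, with a hypothetical `Run` record and invented numbers:

```rust
/// One agent run: what it cost and whether it produced a usable result.
struct Run {
    cost_usd: f64,
    succeeded: bool,
}

/// Total spend (failed and abandoned runs included) divided by the number of
/// runs that finished the job. None if nothing succeeded: the cost per
/// outcome is unbounded in that case.
fn cost_per_successful_outcome(runs: &[Run]) -> Option<f64> {
    let total: f64 = runs.iter().map(|r| r.cost_usd).sum();
    let successes = runs.iter().filter(|r| r.succeeded).count();
    if successes == 0 {
        None
    } else {
        Some(total / successes as f64)
    }
}

fn main() {
    // Hypothetical numbers for illustration only, not measured costs.
    let cheap_but_spirals = [
        Run { cost_usd: 0.10, succeeded: true },
        Run { cost_usd: 0.10, succeeded: true },
        Run { cost_usd: 1.50, succeeded: false }, // a runaway retry loop, killed
    ];
    let pricier_single_pass = [
        Run { cost_usd: 0.40, succeeded: true },
        Run { cost_usd: 0.40, succeeded: true },
        Run { cost_usd: 0.40, succeeded: true },
    ];
    // 1.70 spent / 2 successes = 0.85 per outcome
    println!("{:?}", cost_per_successful_outcome(&cheap_but_spirals));
    // 1.20 spent / 3 successes = 0.40 per outcome
    println!("{:?}", cost_per_successful_outcome(&pricier_single_pass));
}
```

Counting abandoned runs in the numerator is the point: a runaway loop shows up as spend with no outcome attached, which is exactly what per-token pricing hides.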

"OpenAI-compatible" is a wire format, not a behavior contract

Most LLM providers advertise OpenAI-compatible APIs, which suggests swapping models is a config change. In practice, "compatible" covers the request/response shape but not the behavioral details.

Our agent loop checked finish_reason to decide what to do:

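```rust
// Inside the agent loop (simplified): control flow keys off finish_reason.
let tool_calls = choice.message.tool_calls.as_deref().unwrap_or_default();

match choice.finish_reason.as_deref() {
    Some("stop") => {
        break; // Model is done
    }
    Some("tool_calls") => {
        let results = self.execute_tools(tool_calls).await?;
        messages.push(/* tool results */);
        continue;
    }
    _ => { /* handle other cases */ }
}
```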

The assumption: finish_reason=stop means the model has nothing more to do. finish_reason=tool_calls means it wants tool execution.

With Claude, this holds. With Kimi, it doesn't. Kimi returns finish_reason=stop with tool_calls populated in the same response. The finish reason reflects why generation stopped (hit a stop token), not what the response contains.

Our code saw stop, broke out of the inner loop, and silently dropped the tool calls. Since we built our agent framework to retry on incomplete results, the outer loop would restart with the accumulated context, hit the same bug, and retry again. Half a million tokens of retries.

The fix:

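```rust
if let Some(tool_calls) = &choice.message.tool_calls {
    if !tool_calls.is_empty() {
        // Tool calls present, execute regardless of finish_reason
        let results = self.execute_tools(tool_calls).await?;
        messages.push(/* tool results */);
        continue;
    }
}

// No tool calls: only now does finish_reason decide whether we're done.
if choice.finish_reason.as_deref() == Some("stop") {
    break;
}
// Other finish reasons (e.g. "length") are handled as before.
```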

Check the response body first. Treat finish_reason as informational. If your agent loop uses finish_reason to control flow, you have this same latent bug with any provider that separates finish semantics from response content. We'd never hit it because we'd never tested with a provider that does.
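Isolated as a pure function, the rule is easy to unit-test against each provider's response shapes. A minimal sketch, assuming simplified, hypothetical `Choice` and `NextStep` types rather than any client library's real structs:

```rust
/// Hypothetical, simplified view of one choice in an OpenAI-style response.
/// Tool calls are reduced to their names; real client types carry much more.
struct Choice {
    finish_reason: Option<String>,
    tool_calls: Option<Vec<String>>,
}

#[derive(Debug, PartialEq)]
enum NextStep {
    ExecuteTools,  // run the tools, append results, call the model again
    Done,          // no tool calls and the model stopped normally
    Other(String), // e.g. "length", or "none" if finish_reason is missing
}

/// Look at the response body first; finish_reason only matters when there
/// are no tool calls to execute.
fn next_step(choice: &Choice) -> NextStep {
    let has_tool_calls = choice
        .tool_calls
        .as_ref()
        .is_some_and(|calls| !calls.is_empty());
    if has_tool_calls {
        return NextStep::ExecuteTools;
    }
    match choice.finish_reason.as_deref() {
        Some("stop") => NextStep::Done,
        other => NextStep::Other(other.unwrap_or("none").to_string()),
    }
}

fn main() {
    // The shape that broke our loop: finish_reason "stop" plus tool calls.
    let response = Choice {
        finish_reason: Some("stop".to_string()),
        tool_calls: Some(vec!["read_file".to_string()]),
    };
    println!("{:?}", next_step(&response)); // ExecuteTools
}
```

One fixture per provider, including the stop-plus-tool-calls case, is a cheap way to catch this class of bug before real traffic does.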

Tool call IDs also differ between providers (toolu_01ABC... vs call_abc123...), so we had to add ID normalization when sessions crossed provider boundaries. Two providers can accept identical payloads and behave very differently. "OpenAI-compatible" says nothing about finish_reason semantics, ID formats, or how a model handles tool failures.
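A minimal sketch of that normalization, assuming the only requirements are that IDs look like the target provider's and that every tool call still pairs with its tool result. `IdNormalizer` and its counter-based scheme are hypothetical; real providers may impose other format rules:

```rust
use std::collections::HashMap;

/// Hypothetical helper: maps every tool call ID in a session's history onto a
/// target provider's ID style. Every ID in the history must pass through the
/// same normalizer so tool calls and tool results keep matching IDs.
struct IdNormalizer {
    target_prefix: String, // e.g. "toolu_" or "call_", the formats quoted above
    mapping: HashMap<String, String>,
}

impl IdNormalizer {
    fn new(target_prefix: &str) -> Self {
        Self {
            target_prefix: target_prefix.to_string(),
            mapping: HashMap::new(),
        }
    }

    /// The same input ID always yields the same output ID; distinct inputs get
    /// distinct outputs because each new ID takes the next counter value.
    fn normalize(&mut self, original: &str) -> String {
        let next = self.mapping.len();
        let prefix = &self.target_prefix;
        self.mapping
            .entry(original.to_string())
            .or_insert_with(|| format!("{prefix}{next:06}"))
            .clone()
    }
}

fn main() {
    // A session moving to a provider whose IDs look like "toolu_...".
    let mut ids = IdNormalizer::new("toolu_");
    let call_id = ids.normalize("call_abc123"); // from the assistant's tool call
    let result_id = ids.normalize("call_abc123"); // from the matching tool result
    assert_eq!(call_id, result_id);
    println!("{call_id}"); // toolu_000000
}
```

Running both the assistant's tool calls and the matching tool results through the same normalizer is what keeps each pair linked.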

We fixed the tool call bug and the ID mismatches. Kimi started completing reviews. But our costs were still higher than expected.

Provider switching destroys your prompt cache

Our LLM proxy routes requests through a failover chain of providers, each with its own circuit breaker. Three consecutive failures or a rate limit opens a provider's circuit, and requests skip to the next provider in the chain. After a cooldown, a single probe request tests whether the provider has recovered.
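The breaker rules above fit in a small state machine. A minimal sketch with hypothetical type and method names; the threshold and cooldown are parameters, and callers pass the current time in so the logic can be tested without sleeping:

```rust
use std::time::{Duration, Instant};

#[derive(Debug, Clone, Copy, PartialEq)]
enum State {
    Closed { consecutive_failures: u32 },
    Open { since: Instant },
    HalfOpen, // the single probe request is in flight
}

/// Hypothetical per-provider circuit breaker.
struct CircuitBreaker {
    state: State,
    failure_threshold: u32,
    cooldown: Duration,
}

impl CircuitBreaker {
    fn new(failure_threshold: u32, cooldown: Duration) -> Self {
        Self {
            state: State::Closed { consecutive_failures: 0 },
            failure_threshold,
            cooldown,
        }
    }

    /// true: send the request here. false: skip to the next provider in the chain.
    fn allow_request(&mut self, now: Instant) -> bool {
        match self.state {
            State::Closed { .. } => true,
            State::Open { since } if now.duration_since(since) >= self.cooldown => {
                self.state = State::HalfOpen; // cooldown over: let one probe through
                true
            }
            State::Open { .. } | State::HalfOpen => false,
        }
    }

    /// Any success, including the probe, closes the circuit and resets the count.
    fn record_success(&mut self) {
        self.state = State::Closed { consecutive_failures: 0 };
    }

    /// A rate limit opens the circuit at once; other failures count toward the threshold.
    fn record_failure(&mut self, now: Instant, rate_limited: bool) {
        self.state = match self.state {
            State::Closed { consecutive_failures } => {
                let failures = consecutive_failures + 1;
                if rate_limited || failures >= self.failure_threshold {
                    State::Open { since: now }
                } else {
                    State::Closed { consecutive_failures: failures }
                }
            }
            State::HalfOpen => State::Open { since: now }, // failed probe: new cooldown
            open @ State::Open { .. } => open, // stray failure from an earlier request
        };
    }
}

fn main() {
    let t0 = Instant::now();
    let mut kimi = CircuitBreaker::new(3, Duration::from_secs(30));
    kimi.record_failure(t0, true); // rate limited: circuit opens immediately
    println!("{}", kimi.allow_request(t0 + Duration::from_secs(5))); // false
    println!("{}", kimi.allow_request(t0 + Duration::from_secs(30))); // true (probe)
}
```

A proxy built on this would walk its chain in order and send each request to the first provider whose `allow_request` returns true.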

Kimi's rate limits were lower, a consequence of provider capacity. When we hit them, the circuit would open and requests would fail over to Claude. The failover itself worked as intended; the cost implications were less obvious.

Both providers support prompt caching. Anthropic charges 1/10th the base input rate for cache reads, with a 25% surcharge on the initial cache write. For long-running agent sessions with growing context, cache reads dominate total cost, often 90%+ of the bill.

Caches are per-provider. When the circuit breaker switches from Kimi to Claude mid-session, Claude has never seen this conversation prefix. Cold cache. Full input price, plus the write surcharge to establish a new cache. When it switches back to Kimi a few requests later, that cache has likely expired too.
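A worked example shows the size of the penalty. The 0.1x read and 1.25x write multipliers and the $3/MTok base rate come from above; the 100k-token prefix is a made-up size for a long agent session. A minimal sketch:

```rust
/// Input cost in USD of sending a `prefix_tokens`-long conversation prefix,
/// using the cache multipliers quoted above: 0.1x on a cache read (warm),
/// 1.25x on a cache write (cold, e.g. right after a provider switch).
/// Any new, uncached tail of the prompt is ignored to keep the comparison clean.
fn prefix_cost_usd(prefix_tokens: u64, base_usd_per_mtok: f64, warm_cache: bool) -> f64 {
    let multiplier = if warm_cache { 0.10 } else { 1.25 };
    prefix_tokens as f64 / 1_000_000.0 * base_usd_per_mtok * multiplier
}

fn main() {
    // Hypothetical 100k-token prefix at the $3/MTok base input rate.
    let warm = prefix_cost_usd(100_000, 3.0, true); // 0.1 MTok * $3 * 0.10 = $0.03
    let cold = prefix_cost_usd(100_000, 3.0, false); // 0.1 MTok * $3 * 1.25 = $0.375
    println!("warm ${warm:.3}, cold ${cold:.3}, {:.1}x", cold / warm);
}
```

Whatever the prefix size, a cold write of the same prefix costs 1.25 / 0.10 = 12.5 times a warm read, so every mid-session switch re-pays the prefix at roughly an order of magnitude more than staying put.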

With lower rate limits, we were switching providers constantly under any real load. Our effective cache hit rate dropped significantly.
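Averaged over a session, the hit rate sets the effective price. A sketch of the blended rate, reusing the quoted multipliers and the $3/MTok base; the hit rates are illustrative, not numbers from our traffic:

```rust
/// Blended input price per million prefix tokens at a given cache hit rate,
/// assuming every hit is a 0.1x cache read and every miss is a 1.25x cache write.
fn effective_usd_per_mtok(base_usd_per_mtok: f64, hit_rate: f64) -> f64 {
    assert!((0.0..=1.0).contains(&hit_rate), "hit_rate must be between 0 and 1");
    base_usd_per_mtok * (hit_rate * 0.10 + (1.0 - hit_rate) * 1.25)
}

fn main() {
    // Illustrative hit rates, not measured ones.
    for hit_rate in [0.95, 0.80, 0.50] {
        let price = effective_usd_per_mtok(3.0, hit_rate);
        println!("{:.0}% hits -> ${price:.4}/MTok", hit_rate * 100.0);
    }
}
```

Under these assumptions, falling from a 95% to a 50% hit rate moves the blended rate from about $0.47 to about $2.03 per MTok, more than 4x.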

This compounds quickly. A single PR review might generate 5-10 concurrent LLM calls across sub-tasks. With multiple PRs in flight, you hit the rate limit constantly, which triggers more failovers, which invalidates more caches. Prompt caching on a single provider at full price can be cheaper than a discounted provider you keep falling off of.

Why we went back to Claude

After a weekend of debugging, we pulled Kimi from orchestration. We run Claude across our workloads at varying model tiers, matching capability to task criticality. There are parts of our pipeline that don't need frontier capabilities and could benefit from pre-processing, smarter filtering, or cheaper models. We're still exploring those.

The 5x cheaper model ended up costing us more. Between the infinite loop bug, cache invalidation from provider switching, and engineering time spent debugging, the savings never materialized. Models keep getting cheaper and more capable with each generation, and the cost gap that motivates provider switching tends to narrow on its own. If you're evaluating alternatives, start with your failure modes. Run your real workload through the candidate, not synthetic benchmarks. Measure cost per successful outcome. And model your cache behavior under the conditions your system actually operates in, not just steady-state.

Gitar runs multiple specialized AI agents on every PR. They review code, fix CI failures, execute custom rules, and respond to developer feedback in-thread. Your team doesn't have to build the harness, the failover, or the cost controls. You can try it today at gitar.ai.